The OpenAI Completion interface can then be used to generate a predicted continuation (a completion) of an input prompt. For the next examples, we will use the DaVinci 3 model (`text-davinci-003`) to create completions from a given prompt.

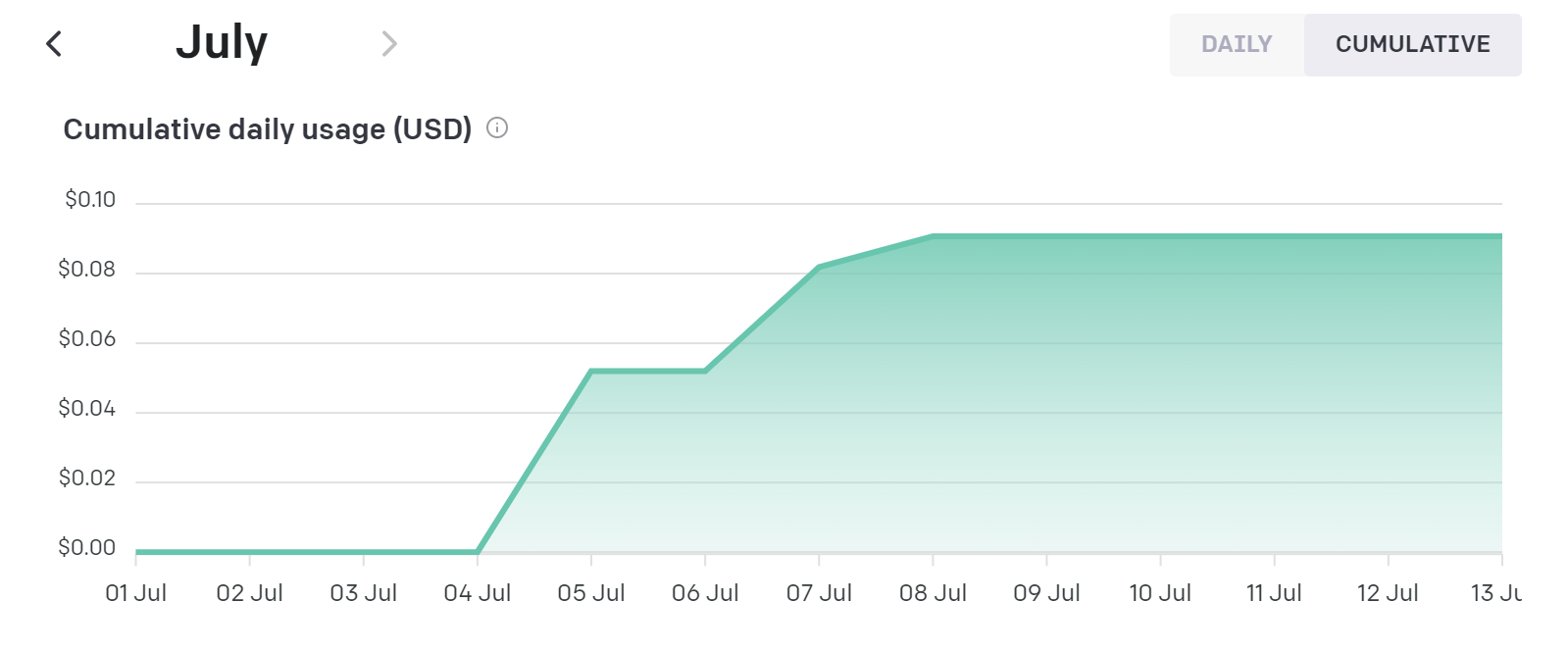
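A completion call is a JSON POST to the Completions endpoint, and the reply carries the generated text plus a token count. The sketch below shows that request/response shape using only the Python standard library. It is not the article's own code. It assumes the legacy `/v1/completions` REST endpoint, an API key in the `OPENAI_API_KEY` environment variable, and illustrative defaults for `max_tokens` and `temperature`. The helper names (`build_completion_request`, `extract_completion`, `create_completion`) are hypothetical. The model is a parameter because OpenAI has since retired `text-davinci-003`, so you may need to pass a currently available completions model such as `gpt-3.5-turbo-instruct`.

```python
import json
import os
import urllib.request

# Assumed endpoint: the legacy Completions API that served text-davinci-003.
COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt, model="text-davinci-003",
                             max_tokens=64, temperature=0.7):
    """Return the JSON body for a single completion request.

    The max_tokens/temperature defaults are illustrative assumptions.
    """
    return {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def extract_completion(response):
    """Return (generated text, total tokens used) from a parsed response dict."""
    text = response["choices"][0]["text"]
    # "usage" reports token counts, which is what each call is billed on.
    usage = response.get("usage", {})
    return text.strip(), usage.get("total_tokens", 0)


def create_completion(prompt, api_key=None, **kwargs):
    """POST the request over HTTPS; needs network access and a valid API key."""
    api_key = api_key or os.environ["OPENAI_API_KEY"]
    body = json.dumps(build_completion_request(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        COMPLETIONS_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return extract_completion(json.loads(resp.read().decode("utf-8")))
```

With a key set, `create_completion("Write a tagline for an ice cream shop.")` returns the completion text and the number of tokens consumed. Keeping the payload builder and response parser separate from the network call makes both testable offline.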

Note> When using the API, you take on the burden of accounting for model-dependent costs and rate limits on every call. The cost can be checked under your OpenAI account. An example snapshot is shown below: